Ensuring the trustworthiness and security of enterprise applications

The emergence of AI is revolutionizing the landscape of business operations, prompting numerous organisations to explore the potential of AI to enhance their efficiency and gain a competitive edge.

As AI rapidly evolves, there is an increasing need for regulation to ensure the reliability of AI systems and prevent the harm they may cause. For instance, recent news reports indicate that Samsung employees leaked confidential company data by entering it into ChatGPT, and the service has been banned in Italy over privacy concerns.

Beyond data security, people using AI tools such as ChatGPT often wonder whether answers that appear correct are actually accurate. It can be difficult for users to judge the correctness of AI-generated answers, and in some cases an erroneous response may have significant adverse effects.

Therefore, after being dazzled by the remarkable capabilities of AI tools like ChatGPT, it seems necessary for us to take a step back and consider how to build responsible AI. Some companies, such as Microsoft, a partner of OpenAI, have proposed responsible AI principles to govern the design, building, and testing of machine learning models to ensure fairness, inclusiveness, reliability, safety, security, transparency, and accountability. Additionally, Microsoft has unveiled the Azure OpenAI service on its Azure cloud platform.

The Azure OpenAI service, which provides programmatic access to OpenAI language models, is now generally available and includes support for chat interactions with the GPT model. Microsoft has integrated OpenAI models with various services, including Bing, GitHub Copilot, and Microsoft 365, and is exploring the potential of using ChatGPT in enterprise-grade applications on the Azure service.

Restrict information only to those who are involved

Incorporating AI services such as ChatGPT into enterprise-grade applications places stringent demands on data security and on the accuracy of AI-generated content. By using Azure OpenAI, customers can leverage the same models as OpenAI while benefiting from the security features of Microsoft Azure, such as private networking and regional availability.

Microsoft says it hosts the OpenAI models on its own Azure infrastructure, and all customer data sent to Azure OpenAI remains within the service. Prompts and completion data may be stored temporarily by the Azure OpenAI Service, in the same region as the resource, for a maximum of 30 days. It is important to note that this data is not sent to OpenAI, and Microsoft does not use customer data to train, retrain, or improve the models in the Azure OpenAI Service.

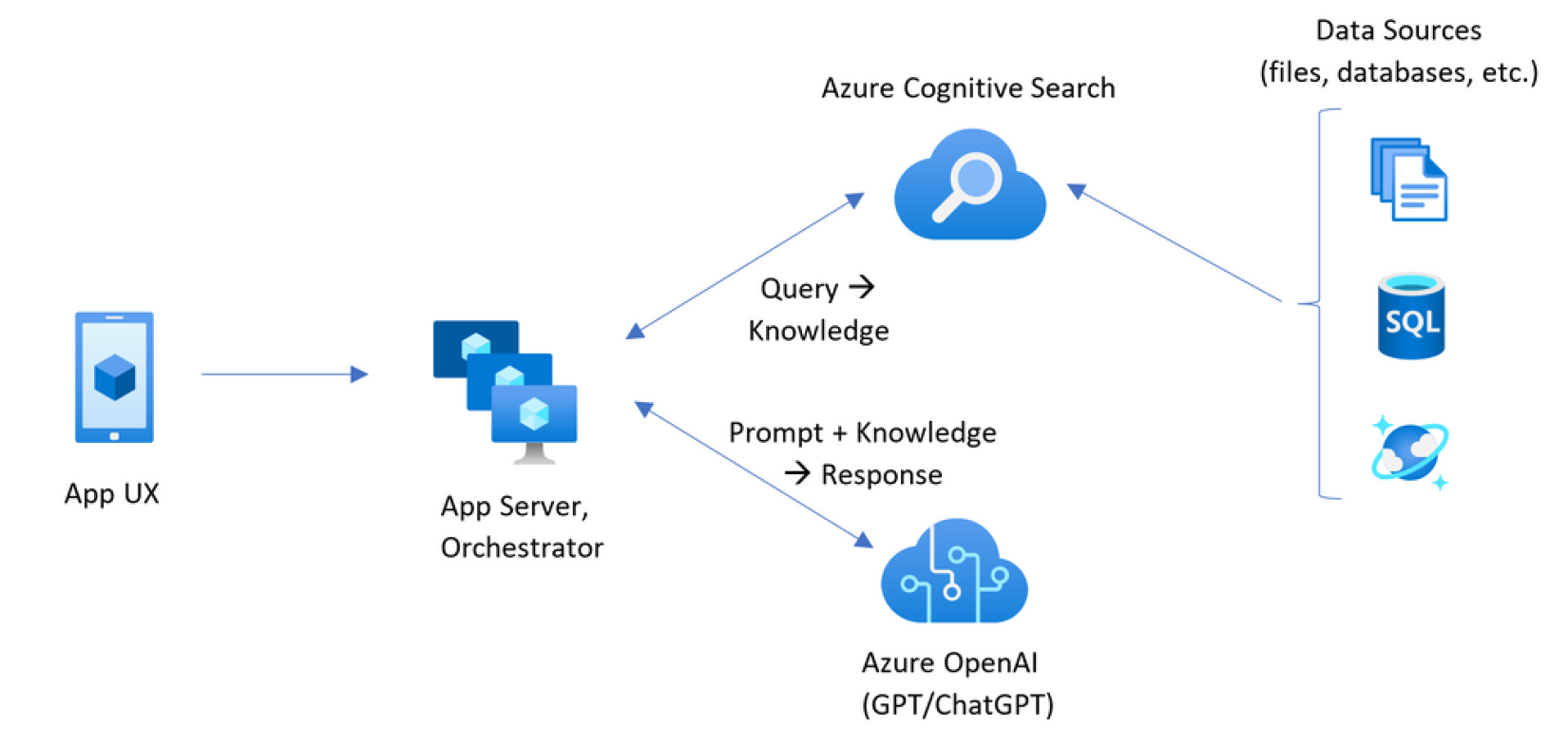

Moreover, to strengthen data security and reliability, users can integrate the Azure OpenAI service with other Azure services. For example, Microsoft has shown a demo that integrates Azure OpenAI with the Azure Cognitive Search service. The knowledge base is stored in an Azure Cognitive Search index, which makes it possible to implement building blocks for security and filtering, such as granular document-level access control. In the demo, information about an office move intended to enhance team collaboration is restricted to the team members involved. If someone who is not involved asks "Is there a move or office space change coming?", the AI-generated response is "I don't know," because no documents about the project are available to that user.
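
The document-level access control described above can be sketched roughly as follows. This is a minimal in-memory illustration, not the code from Microsoft's demo: the `Doc` class, the `group_ids` field, the group names, and the keyword matching are all assumptions made for the example. `build_security_filter` produces an OData filter string of the shape commonly used for security trimming in Azure Cognitive Search, while the other functions simulate the same trimming locally so the behaviour can be checked without a search service.

```python
from dataclasses import dataclass, field


@dataclass
class Doc:
    """A knowledge-base entry tagged with the groups allowed to read it (hypothetical schema)."""
    doc_id: str
    content: str
    group_ids: list = field(default_factory=list)


def build_security_filter(user_groups):
    """Build an OData filter that keeps only documents shared with the user's groups.

    Assumes the index has a filterable collection field named `group_ids`
    and that group IDs contain no quotes or commas.
    """
    return "group_ids/any(g: search.in(g, '{}'))".format(",".join(user_groups))


def retrieve(index, query, user_groups):
    """Local stand-in for a filtered search: access check first, then naive keyword match."""
    allowed = set(user_groups)
    terms = {w.strip("?.,!").lower() for w in query.split()}
    terms = {t for t in terms if len(t) > 3}  # ignore short filler words
    return [
        d for d in index
        if allowed & set(d.group_ids)
        and any(t in d.content.lower() for t in terms)
    ]


def answer(index, query, user_groups):
    """Return "I don't know" when no permitted document supports an answer."""
    docs = retrieve(index, query, user_groups)
    if not docs:
        return "I don't know"
    # A real application would pass these passages to the model as grounding context.
    return " ".join(d.content for d in docs)


index = [
    Doc("1", "The office move to the new floor is planned for next quarter.", ["move-project"]),
    Doc("2", "The cafeteria menu is updated weekly.", ["all-staff"]),
]
question = "Is there a move or office space change coming?"
print(answer(index, question, ["all-staff"]))                  # user outside the project
print(answer(index, question, ["all-staff", "move-project"]))  # project member
```

Filtering before the model sees any text means a restricted document can never leak into a response, whatever the prompt says.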

Generate trustworthy responses and prevent abuse and harmful content

Another consideration for using AI in enterprise applications is how to make responses trustworthy and prevent abusive and harmful content. One approach to ensuring trustworthiness is to cite the sources used to generate responses. The Microsoft demo mentioned earlier provides source citations for the facts it presents, so users can validate the information and gain confidence in the accuracy of the response.
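
One way to make citations checkable is to label each retrieved passage with its source name, ask the model to cite those names in square brackets, and then confirm that every citation in the answer points at a passage that was actually supplied. The sketch below assumes that bracket convention and uses hypothetical file names and a hand-written sample answer. It does not call any model.

```python
import re


def build_context(passages):
    """Format retrieved passages as 'source: text' lines for the prompt."""
    return "\n".join(f"{source}: {text}" for source, text in passages.items())


def extract_citations(answer):
    """Return the source names cited as [name] in the answer, in order, without duplicates."""
    seen = []
    for name in re.findall(r"\[([^\[\]]+)\]", answer):
        if name not in seen:
            seen.append(name)
    return seen


def unsupported_citations(answer, passages):
    """Citations that do not match any supplied passage and so cannot be verified."""
    return [c for c in extract_citations(answer) if c not in passages]


# Hypothetical retrieved passages, keyed by source name.
passages = {
    "benefits.pdf": "Employees receive 20 days of annual leave.",
    "handbook.pdf": "Leave requests go through the HR portal.",
}
# Hand-written stand-in for a model response.
sample_answer = (
    "You get 20 days of annual leave [benefits.pdf], "
    "requested through the HR portal [handbook.pdf][policy-2019.pdf]."
)
print(extract_citations(sample_answer))
print(unsupported_citations(sample_answer, passages))
```

An application could hide or flag any answer for which `unsupported_citations` is non-empty, since such a citation refers to a document the model was never given.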

Preventing abusive and harmful content requires a more proactive approach. This is where content filtering comes in. The content management system of the Azure OpenAI Service screens out potentially harmful content by running the input prompt and generated completion through a series of classification models. If harmful content is detected, the system either returns an error or flags the response as having gone through content filtering.
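
An application calling the service needs to handle both outcomes. The sketch below classifies an already-received HTTP response. It assumes the commonly documented behaviour that a filtered prompt yields an HTTP 400 error whose code is `content_filter`, and that a filtered completion is marked with a `finish_reason` of `content_filter`. The sample payloads are hand-written and trimmed for illustration, and `classify_response` is a hypothetical helper, not part of any SDK.

```python
def classify_response(status_code, body):
    """Map a chat-completion HTTP response to an (outcome, text) pair."""
    error = body.get("error") or {}
    # Case 1: the input prompt itself was rejected by the filter.
    if status_code == 400 and error.get("code") == "content_filter":
        return ("prompt_blocked", None)
    if status_code != 200:
        return ("error", error.get("message"))

    choices = body.get("choices") or []
    if not choices:
        return ("error", "no choices returned")
    choice = choices[0]
    text = (choice.get("message") or {}).get("content")
    # Case 2: the generated completion was flagged by the filter.
    if choice.get("finish_reason") == "content_filter":
        return ("completion_filtered", text)
    return ("ok", text)


# Hand-written sample payloads (assumed shapes, trimmed for illustration).
blocked = {"error": {"code": "content_filter", "message": "The prompt was filtered."}}
flagged = {"choices": [{"finish_reason": "content_filter", "message": {"content": None}}]}
normal = {"choices": [{"finish_reason": "stop", "message": {"content": "Hello!"}}]}

print(classify_response(400, blocked))
print(classify_response(200, flagged))
print(classify_response(200, normal))
```

Keeping the two filtered cases distinct lets the application show a different message when the user's own input was rejected than when the model's output was withheld.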

In summary, ensuring trustworthiness and preventing abusive content are crucial considerations when using AI in enterprise applications. By citing sources and utilizing content filtering, these applications can provide accurate and reliable responses while maintaining a safe and respectful environment for all users.

Jiadong Chen is a cloud architect at Waikato software specialist Company-X and a Microsoft Most Valuable Professional.